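I have recently read a lot of commentary on AlphaStar's 10:0 win over the human StarCraft II players TLO and MaNa, and opinions are all over the place. On Friday I downloaded StarCraft II. It is free, but at 25 GB the download takes a while. I then went through the game replays on the DeepMind website one by one. There are 11 in total: 10 in which AlphaStar wins and one in which MaNa wins. I am not an SC2 player, but I have a fair amount of experience with the original StarCraft, and although my level is not high, I know well what decides a game. After studying the 10:0 replays carefully and then reading the AlphaStar blog post, my feeling is that humans still have a chance against the AI for now, but in the long run AlphaStar will simply be unbeatable. The reasons are worth examining.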

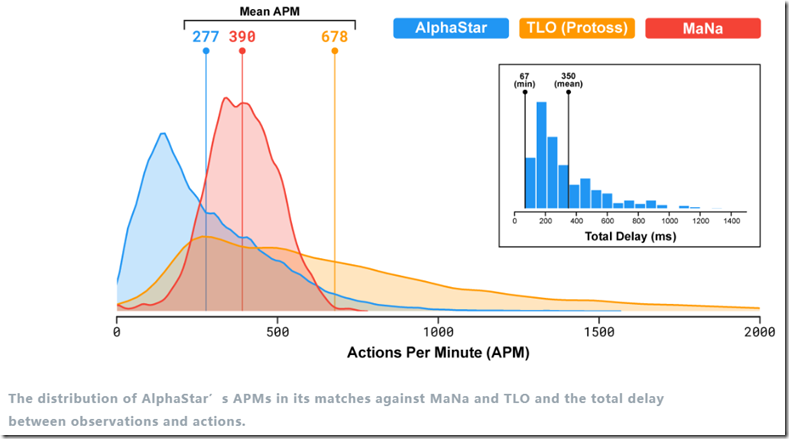

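Reference 1 analyzes the APM (Actions Per Minute) of humans and of the AI: humans peak at about 500, while the AI can reach 1500. Serral, the top SC2 player, has an EAPM (effective APM) of up to 344, and Reference 1 takes this to be the reason for his many wins. In other words, winning at SC2 depends on APM, so Reference 1 concludes that the AI needs no clever tactics or strategy: APM alone lets it crush humans at SC2.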
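To make the APM versus EAPM distinction concrete, here is a minimal sketch of how both could be computed from an action log. The `Action` record, its fields, and the spam rule (a repeat of the previous action's kind and target within half a second does not count) are assumptions made for illustration; real replay tools use their own definitions of an effective action.

```python
from dataclasses import dataclass


@dataclass
class Action:
    time_s: float  # game time of the action, in seconds
    kind: str      # hypothetical action type, e.g. "move" or "attack"
    target: str    # hypothetical target identifier


def apm(actions, duration_s):
    """Raw actions per minute: every logged action counts."""
    return len(actions) * 60.0 / duration_s


def effective_apm(actions, duration_s, spam_window_s=0.5):
    """Actions per minute after dropping repeats.

    Assumption: an action is spam if it repeats the previous action's
    kind and target within spam_window_s seconds.
    """
    effective = 0
    previous = None
    for action in sorted(actions, key=lambda a: a.time_s):
        is_spam = (
            previous is not None
            and action.kind == previous.kind
            and action.target == previous.target
            and action.time_s - previous.time_s < spam_window_s
        )
        if not is_spam:
            effective += 1
        previous = action
    return effective * 60.0 / duration_s


# A made-up one-minute log: six actions, three of them repeat clicks.
log = [
    Action(0.0, "move", "A"),
    Action(0.1, "move", "A"),   # repeat click, filtered
    Action(0.2, "move", "A"),   # repeat click, filtered
    Action(5.0, "attack", "B"),
    Action(10.0, "move", "A"),
    Action(10.3, "move", "A"),  # repeat click, filtered
]
print(apm(log, 60.0), effective_apm(log, 60.0))
```

On this toy log the raw APM is twice the effective APM, which is why a raw APM figure alone says little about how much useful work a player or an agent is doing.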

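When I studied StarCraft AI last year, I concluded that AI could not win at RTS games. That differs from the conclusion of Reference 1. As for why, see my analysis below and the AlphaStar blog post I quote.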

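My view up front: the AI has to master tactics, and a large number of them, and then combine them with APM in order to win. Without intelligence, APM is useless. As for strategy, I cannot judge whether today's AI has any. After all, the same set of rules can be called tactics or strategy.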

I. Replay review

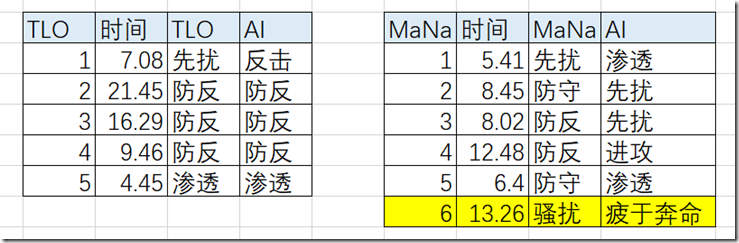

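Note that MaNa's games being shorter than TLO's does not mean MaNa is the weaker player. MaNa calls GG as soon as he sees the game is lost, whereas TLO usually holds on until the position is beyond saving. Interestingly, when TLO and MaNa first faced the AI, both tried fairly aggressive attacks, and both were beaten badly. Take MaNa's first game: MaNa attacked first, but the AI slipped past him and pushed straight into his main base. Even with everything he had thrown into the defense, workers included, the AI still wiped him out. MaNa then tried many of the standard Protoss attacks, and the AI held and destroyed every one of them, until MaNa found a bug in the AI.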

II. Winning on execution (APM)

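The replays make it very clear that, with or without spells, the AI consistently wins open-field battles with fewer units, and that is how it takes the game. Keep in mind that if one side comes out of an open-field battle with even a few units alive and those units push into the opponent's main base, the game is essentially won. In other words, the decisive battle is effectively match point. Note, however, that whether a game reaches the open-field stage at all is decided by tactics, not by APM.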

III. Tactical coordination

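Beyond winning on execution, the AI is very tactical. Its tactics relate either to building placement or to the abilities of a particular unit. (I believe AlphaStar learned these from past games: the AlphaStar team has the largest database of game records. Of course, modeling is indispensable too.) Below are several tactics seen in the replays. The last one is the drop tactic with which MaNa found and exploited a bug in the AI's play to beat it.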

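Tactic 1: The AI's tactics are very direct: create a local advantage. In the Carrier battle above, TLO's army was the stronger one, but the AI voluntarily gave up two bases and fell back to its main base, which had Shield Batteries and Photon Cannons, to wait for TLO's attack. In the melee the AI's fire was always concentrated on TLO's wounded units, while a human attacking in that situation simply cannot focus precisely on a single unit. The AI's army was also supported by the Shield Batteries and largely kept its shields up. By the end of the fight the human had dropped from 199 to 109, while the AI's combat units still stood at 192. (Note: these figures may include newly built units.)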
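The value of concentrating fire can be seen even in a toy model. The sketch below is an illustration under strong assumptions, not StarCraft's actual combat mechanics: both sides start with identical units, every living unit fires one shot per tick, there are no shields, ranges or cooldowns, and the two sides differ only in how they pick targets. The function names are hypothetical.

```python
def focus_fire(target_hps, shots, damage):
    """Every shot goes to the weakest living target; spare shots move on."""
    survivors = []
    for hp in sorted(target_hps):
        while shots > 0 and hp > 0:
            hp -= damage
            shots -= 1
        if hp > 0:
            survivors.append(hp)
    return survivors


def spread_fire(target_hps, shots, damage):
    """Shots are dealt round-robin across all living targets."""
    hps = list(target_hps)
    if not hps:
        return []
    for i in range(shots):
        hps[i % len(hps)] -= damage
    return [hp for hp in hps if hp > 0]


def battle(units=10, hp=100, damage=10):
    """Return (survivors of the focusing side, survivors of the spreading side)."""
    focusing = [hp] * units   # side that concentrates its fire
    spreading = [hp] * units  # side that spreads its fire
    while focusing and spreading:
        # Both sides shoot at the same time, one shot per living unit.
        next_spreading = focus_fire(spreading, len(focusing), damage)
        next_focusing = spread_fire(focusing, len(spreading), damage)
        focusing, spreading = next_focusing, next_spreading
    return len(focusing), len(spreading)


print(battle())
```

With the default numbers the focusing side removes one enemy shooter every tick, so incoming damage keeps shrinking, and it finishes with 9 of its 10 units alive against none for the spreading side. Equal armies, very unequal outcome: this is the kind of edge that precise targeting buys.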

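Tactic 2: The AI is good at abilities that human players do not bother with, such as the Disruptor. The Disruptor can deal damage over a large area, though it presumably has its drawbacks. The AI uses it with great skill, and on a good hit it wipes out several enemy units at once.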

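Tactic 3: TLO and the AI both used infiltration tactics, but TLO's attempt was too obvious and the AI found and destroyed it outright. The buildings the AI quietly put up near TLO's base, on the other hand, paid off: it attacked TLO's main base directly, and TLO promptly called GG.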

Tactic 4: Infiltration plus blocking the entrance, quickly breaking into MaNa's main base.

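Tactic 5: In the final game MaNa found a bug in the AI. He repeatedly harassed the AI's workers with drops, which made the AI's assembled attack force keep turning back, and he used the time to build an army, march over and wipe out the AI. This was MaNa's only win, in a game outside the 10:0.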

IV. The AlphaStar blog post

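Below I excerpt the key points. The excerpts are quoted verbatim and cover most of the original text. If you are interested, read the full post on the DeepMind site: https://deepmind.com/blog/alphastar-mastering-real-time-strategy-game-starcraft-ii/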

1、AlphaStar plays the full game of StarCraft II, using a deep neural network that is trained directly from raw game data by supervised learning and reinforcement learning.

2、we worked with Blizzard in 2016 and 2017 to release an open-source set of tools known as PySC2, including the largest set of anonymised game replays ever released. We have now built on this work, combining engineering and algorithmic breakthroughs to produce AlphaStar.

3、AlphaStar’s behaviour is generated by a deep neural network that receives input data from the raw game interface (a list of units and their properties), and outputs a sequence of instructions that constitute an action within the game. More specifically, the neural network architecture applies a transformer torso to the units, combined with a deep LSTM core, an auto-regressive policy head with a pointer network, and a centralised value baseline. We believe that this advanced model will help with many other challenges in machine learning research that involve long-term sequence modelling and large output spaces such as translation, language modelling and visual representations.

4、AlphaStar also uses a novel multi-agent learning algorithm. The neural network was initially trained by supervised learning from anonymised human games released by Blizzard. This allowed AlphaStar to learn, by imitation, the basic micro and macro-strategies used by players on the StarCraft ladder. This initial agent defeated the built-in “Elite” level AI – around gold level for a human player – in 95% of games.

5、These were then used to seed a multi-agent reinforcement learning process. A continuous league was created, with the agents of the league – competitors – playing games against each other, akin to how humans experience the game of StarCraft by playing on the StarCraft ladder.

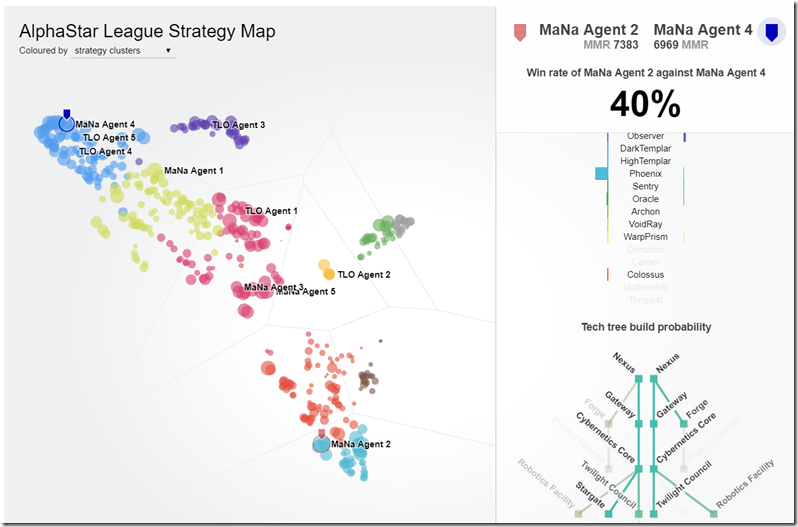

6、As the league progresses and new competitors are created, new counter-strategies emerge that are able to defeat the earlier strategies. While some new competitors execute a strategy that is merely a refinement of a previous strategy, others discover drastically new strategies consisting of entirely new build orders, unit compositions, and micro-management plans. For example, early on in the AlphaStar league, “cheesy” strategies such as very quick rushes with Photon Cannons or Dark Templars were favoured. These risky strategies were discarded as training progressed, leading to other strategies: for example, gaining economic strength by over-extending a base with more workers, or sacrificing two Oracles to disrupt an opponent’s workers and economy. This process is similar to the way in which players have discovered new strategies, and were able to defeat previously favoured approaches, over the years since StarCraft was released. [This is indeed visible in the replays: the AI did not use high-risk quick-rush tactics.]

7、The neural network weights of each agent are updated by reinforcement learning from its games against competitors, to optimise its personal learning objective. The weight update rule is an efficient and novel off-policy actor-critic reinforcement learning algorithm with experience replay, self-imitation learning and policy distillation.

This figure in the original post is very valuable: it shows, dynamically, the win probability between any two agents.

8、In order to train AlphaStar, we built a highly scalable distributed training setup using Google’s v3 TPUs that supports a population of agents learning from many thousands of parallel instances of StarCraft II. The AlphaStar league was run for 14 days, using 16 TPUs for each agent. During training, each agent experienced up to 200 years of real-time StarCraft play. The final AlphaStar agent consists of the components of the Nash distribution of the league – in other words, the most effective mixture of strategies that have been discovered – that run on a single desktop GPU.
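The "Nash distribution of the league" in item 8 can be pictured as a mixture over agents that no single agent beats on average. The post does not say how DeepMind computed it, so the sketch below swaps in a classic textbook method, fictitious play, on a hypothetical 3-agent payoff matrix with a rock-paper-scissors structure. The matrix, the function name and the iteration count are all assumptions, and the method as written applies to symmetric zero-sum games only.

```python
def nash_mixture(payoff, iterations=30000):
    """Approximate a symmetric zero-sum Nash mixture by fictitious play.

    payoff[i][j] is the payoff of agent i against agent j and must be
    antisymmetric (payoff[i][j] == -payoff[j][i]).
    """
    n = len(payoff)
    counts = [0] * n
    counts[0] = 1  # arbitrary starting choice
    for _ in range(iterations):
        # Score of each agent against the empirical mixture played so far.
        scores = [
            sum(payoff[i][j] * counts[j] for j in range(n))
            for i in range(n)
        ]
        # Play the current best response and record it.
        best = max(range(n), key=scores.__getitem__)
        counts[best] += 1
    total = sum(counts)
    return [c / total for c in counts]


# Hypothetical league of three agents that beat each other in a cycle:
# agent 1 beats agent 0, agent 2 beats agent 1, agent 0 beats agent 2.
LEAGUE = [
    [0, -1, 1],
    [1, 0, -1],
    [-1, 1, 0],
]

mixture = nash_mixture(LEAGUE)
print([round(p, 2) for p in mixture])
```

In this cyclic league no single agent is best, and the mixture settles close to equal weights on all three. That is the sense in which a final agent built from a Nash mixture is harder to exploit than any one member of the league.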

9、In its games against TLO and MaNa, AlphaStar had an average APM of around 280, significantly lower than the professional players, although its actions may be more precise. This lower APM is, in part, because AlphaStar starts its training using replays and thus mimics the way humans play the game. Additionally, AlphaStar reacts with a delay between observation and action of 350ms on average.

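Note: Reference 1 has interpreted this chart. The gist is that MaNa's peak APM never exceeded 750 while the AI's could exceed 1500, and that TLO used a special keyboard and hotkey combinations, so his figures cannot be compared on the same footing.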
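A low average APM and a very high peak APM are not in conflict, because the peak is measured over a short window. The sketch below shows the arithmetic on made-up timestamps: a steady one action per second for ten minutes plus a single five-second burst. The 5-second window and all the numbers are assumptions for illustration, not the measurement used in the chart.

```python
def average_apm(times_s, duration_s):
    """Actions per minute over the whole game."""
    return len(times_s) * 60.0 / duration_s


def peak_apm(times_s, window_s=5.0):
    """Highest actions-per-minute rate seen in any window of window_s seconds."""
    times = sorted(times_s)
    most = 0
    start = 0
    for end, t in enumerate(times):
        # Shrink the window until it spans less than window_s seconds.
        while t - times[start] >= window_s:
            start += 1
        most = max(most, end - start + 1)
    return most * 60.0 / window_s


# Hypothetical log: one action per second for 600 s, plus a burst of
# 125 actions packed into about 5 s in the middle of the game.
calm = [float(s) for s in range(600)]
burst = [300.0 + 0.04 * k for k in range(125)]
timestamps = calm + burst

print(average_apm(timestamps, 600.0), peak_apm(timestamps))
```

Here the whole-game average stays below 100 APM while the peak window exceeds 1500 APM, so an average figure and a peak figure for the same player or agent can both be correct while telling very different stories about the decisive fights.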

10、During the matches against TLO and MaNa, AlphaStar interacted with the StarCraft game engine directly via its raw interface, meaning that it could observe the attributes of its own and its opponent’s visible units on the map directly, without having to move the camera – effectively playing with a zoomed out view of the game. We trained two new agents, one using the raw interface and one that must learn to control the camera, against the AlphaStar league. Each agent was initially trained by supervised learning from human data followed by the reinforcement learning procedure outlined above.

11、Our agents were trained to play StarCraft II (v4.6.2) in Protoss v Protoss games, on the CatalystLE ladder map. To evaluate AlphaStar’s performance, we initially tested our agents against TLO: a top professional Zerg player and a GrandMaster level Protoss player. AlphaStar won the match 5-0, using a wide variety of units and build orders. “I was surprised by how strong the agent was,” he said. “AlphaStar takes well-known strategies and turns them on their head. The agent demonstrated strategies I hadn’t thought of before, which means there may still be new ways of playing the game that we haven’t fully explored yet.” [He must have been stunned: the AI actually built a Pylon in the human's main base. It looks senseless, but it is not: the AI quickly built Gateways next to it.]

12、After training our agents for an additional week, we played against MaNa, one of the world’s strongest StarCraft II players, and among the 10 strongest Protoss players. AlphaStar again won by 5 games to 0, demonstrating strong micro and macro-strategic skills. “I was impressed to see AlphaStar pull off advanced moves and different strategies across almost every game, using a very human style of gameplay I wouldn’t have expected,” he said. “I’ve realised how much my gameplay relies on forcing mistakes and being able to exploit human reactions, so this has put the game in a whole new light for me. We’re all excited to see what comes next.” [MaNa was stunned too: these were all moves he had never seen before.]

Related links:

- Is AlphaStar, the AI that "beat StarCraft II professional players", cheating?

- The real capabilities of the StarCraft AI

- AlphaStar: Mastering the Real-Time Strategy Game StarCraft II

- AlphaStar Resources

- Blizzard/s2client-proto

- Watch a visualisation of AlphaStar’s entire second game against MaNa

- Watch the exhibition game against MaNa

- Download 11 replays here